How Can We Stop Algorithm Bias?

We need to identify protections that ensure consumers are safeguarded from algorithmic decisions that go wrong.

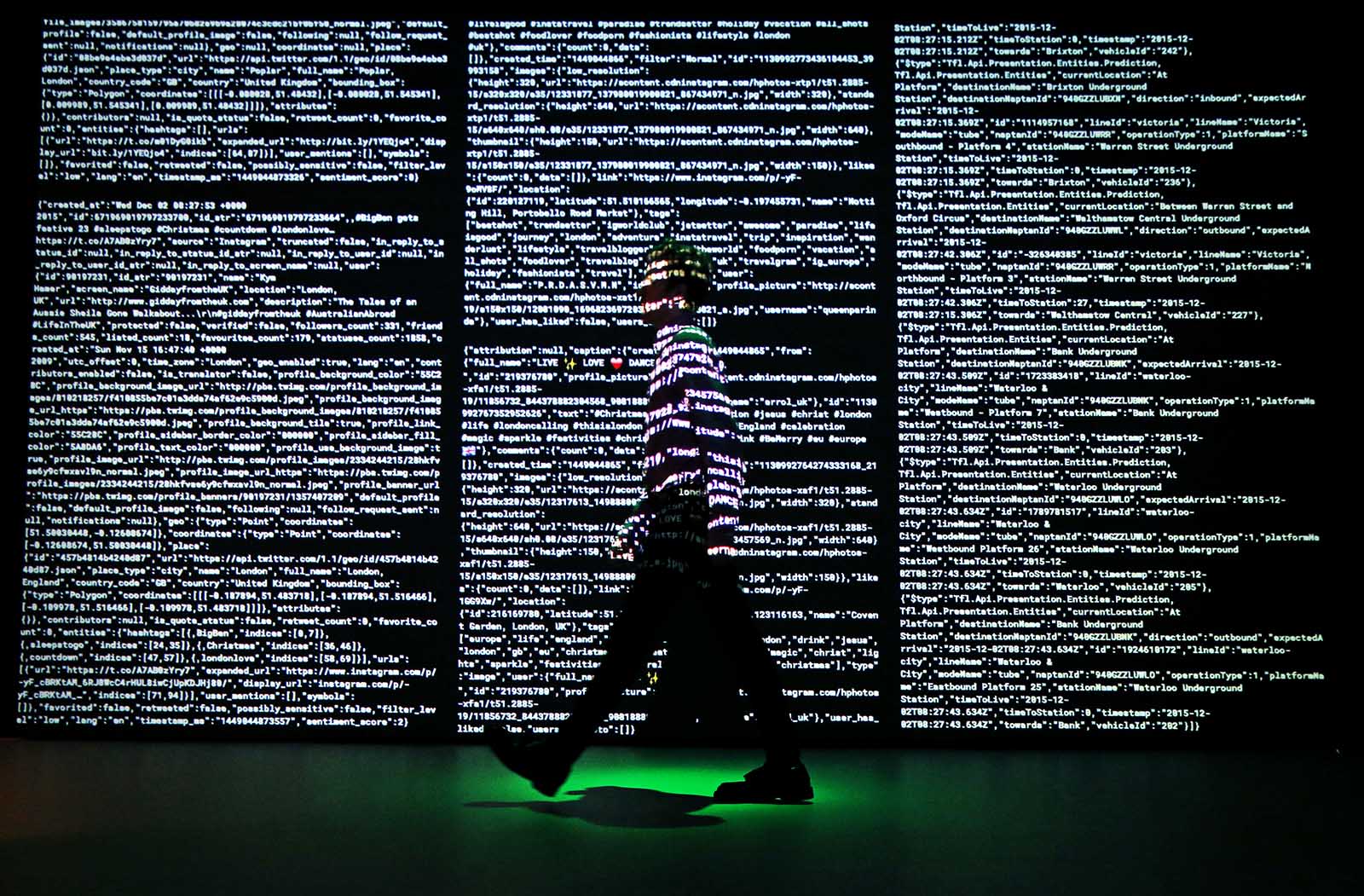

Photo: Peter Macdiarmid/Getty Images for Somerset House

Algorithms are becoming increasingly embedded in our daily lives and are making more and more decisions on our behalf. Kartik Hosanagar is a professor at the Wharton School of the University of Pennsylvania. In his new book, A Human’s Guide to Machine Intelligence, Professor Hosanagar makes the case that we need to arm ourselves with a more nuanced understanding of algorithmic thinking, pointing out that algorithms often think a lot like their creators, i.e., you and me.

BRINK spoke to him about how algorithms are impacting our daily lives.

Kartik Hosanagar: Algorithms are now all around us. Think about Amazon’s “people who bought this also bought that” type of recommendations. Algorithms on apps like Tinder and others are picking who we should meet and who we should date and so on. These systems have a very big impact on choices we make. A study by data scientists at Netflix suggested that 80% of viewing hours on Netflix originate from automated recommendations.

Similarly, a third of the choices on Amazon product purchases are driven by algorithmic recommendations. And Facebook and Twitter algorithms figure out which news stories we get exposed to.

And they’re starting to enter areas where they’re making life and death decisions or very important decisions such as driving our cars, investing our savings — or even helping doctors and making diagnostic decisions. Some help judges make sentencing decisions and so on. So their impact is increasing.

Bias in the Code

BRINK: And how easy is it for algorithms to be tricked or to provide a misleading decision or data?

Mr. Hosanagar: Well, a lot of us have this notion of algorithms as being rational, infallible decision-makers. But the evidence suggests that they are prone to the same kinds of issues we see with human decision-making. For example, there have been sentencing algorithms guiding judges in courtrooms that showed race bias. There are resume-screening algorithms used by HR professionals that have gender bias.

There was a famous flash crash in the U.S. stock market that was driven in part by algorithmic trading. So yes, they do go wrong.

BRINK: And what lies behind these incidents? Is that bad coding, or is it bad data that the algorithms are given?

Mr. Hosanagar: It used to be the case that some of these issues were driven by bad code and that was because most of what algorithms did was determined by a developer or a programmer.

What has happened in recent years is that instead of a developer writing the code for how to diagnose a disease, let’s say, we now feed these algorithms lots of data, such as the records of millions of patients. And we let the system analyze the data and find patterns in there.

So if there are biases in the data because of biases in past decisions made by humans, then the algorithm picks them up. With job applicants, the algorithm looks at the data and says, let me find the kind of people who tend to get hired, or who tend to get promoted. And if there was a gender bias, then the algorithm will likely reject the women applicants at a higher rate, even if their qualifications were similar.
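The mechanism described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the records are invented, and the "model" is a deliberately naive one that simply learns past hire rates. It is not any real screening system, but it shows how a pattern in historical decisions carries over to new applicants with identical qualifications.

```python
from collections import defaultdict

# Hypothetical historical records: (gender, qualification, was_hired).
# The data is invented; all eight applicants are equally qualified.
history = [
    ("M", "high", True), ("M", "high", True), ("M", "high", True), ("M", "high", False),
    ("F", "high", True), ("F", "high", False), ("F", "high", False), ("F", "high", False),
]

def learn_hire_rates(records):
    """Estimate the past hire rate for each (gender, qualification) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [hired, total]
    for gender, qualification, hired in records:
        counts[(gender, qualification)][1] += 1
        if hired:
            counts[(gender, qualification)][0] += 1
    return {key: hired / total for key, (hired, total) in counts.items()}

def screen(model, gender, qualification, threshold=0.5):
    """Accept an applicant if the learned hire rate meets the threshold."""
    return model.get((gender, qualification), 0.0) >= threshold

model = learn_hire_rates(history)
print(model[("M", "high")], model[("F", "high")])            # learned hire rates
print(screen(model, "M", "high"), screen(model, "F", "high"))  # screening decisions
```

In this toy data the learned hire rate is 0.75 for men and 0.25 for women, so the screen accepts the male applicant and rejects the equally qualified female applicant. Nothing in the code mentions bias; it comes entirely from the past decisions.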

Just Getting Started

BRINK: Do you see any end point here? Or do you think that we could get to a point where companies are experiencing diminishing returns in their AI investments?

Mr. Hosanagar: I think in terms of the impact that algorithms are having on our lives and on choices we make or others make about us, we’re only getting started.


Today, most algorithms play a decision-support role, like Netflix suggesting you should watch this or Amazon suggesting you should buy this, but they will become increasingly autonomous, and they will be making more and more decisions for us.

So, for example, instead of Google Maps suggesting a route for us, there’s going to be a driverless car that looks at the map and decides how we get from point A to point B. There will be algorithms that completely automate investment decisions, algorithms that guide diagnostic decisions in hospitals and so on.

Easier to Correct Bias in Code Than in Humans

I don’t see an obvious end point where we say, “hey, let’s stop using algorithms,” because the value they offer is quite tremendous. Which of us wants to go back to an old world where anytime you want to watch something you ask a couple friends and your choice set is limited to only what they know? The value they provide is immense, but at the same time, they can go wrong.

But the flip side is that it’s much easier in the long run to fix and correct biases in algorithmic decisions than in human decisions. Correcting gender or race bias in humans is incredibly hard.

Once we have detected bias with an algorithm, we can put in checks and balances and avoid issues. So the focus should not be on running away from algorithms, but on recognizing that they are not infallible and making sure that they have been subjected to certain checks and tested appropriately before being rolled out, especially in socially consequential settings like recruiting, loan approvals, criminal sentencing or medicine.

A Bill of Digital Rights

BRINK: In your book, you have this idea of a digital bill of rights for humans when interacting with these algorithms. Explain what lies behind it.

Mr. Hosanagar: We are in an era where large, powerful companies are rapidly rolling out advanced AI and algorithms and making many decisions that affect how we live and work. But it’s important to identify protections that ensure consumers are safeguarded from algorithmic decisions that go wrong. So I propose a bill of rights, which has four main pillars:

One is a right to a description of the kinds of data that are used to train the algorithms. And so we know, for example, that if there’s a resume-screening algorithm, did it actually look at our social media posts? It’s useful to know that.

The second one is the right to an explanation regarding the procedures or the logic behind the algorithm. And that could be as simple as saying, “hey, you applied for a loan, and your loan was rejected, and the most important reasons involve your employment status or your education.” Just knowing that is useful because then you know when biases creep in. For example, if the algorithm makes a decision, and one of the factors is gender or race or your zip code or your address, it’s useful to know that.
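As a rough illustration of what such an explanation could look like in practice, the sketch below scores a loan application with a toy linear model and reports the factors that lowered the score most, relative to a reference applicant. The weights, the reference values, the threshold and the feature names are all hypothetical; real lending models and their explanation methods differ.

```python
# Hypothetical weights for a toy linear credit score (not a real lender's model).
WEIGHTS = {"employed": 2.0, "years_of_education": 0.25, "existing_debt_ratio": -3.0}

# Hypothetical reference applicant; contributions are measured against it.
REFERENCE = {"employed": 1, "years_of_education": 16, "existing_debt_ratio": 0.3}
REFERENCE_SCORE = 0.5       # assumed score of the reference applicant
APPROVAL_THRESHOLD = 0.0    # assumed cut-off for approval

def explain(applicant, top_n=2):
    """Return (approved, reasons), where reasons are the factors that
    lowered the score most compared with the reference applicant."""
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - REFERENCE[name]) for name in WEIGHTS
    }
    score = REFERENCE_SCORE + sum(contributions.values())
    approved = score >= APPROVAL_THRESHOLD
    # Most negative contributions first.
    negative = sorted((value, name) for name, value in contributions.items() if value < 0)
    reasons = [name for _, name in negative[:top_n]]
    return approved, reasons

applicant = {"employed": 0, "years_of_education": 12, "existing_debt_ratio": 0.35}
approved, reasons = explain(applicant)
print(approved, reasons)
```

For this applicant the loan is rejected and the two reported reasons are employment status and education, which matches the kind of explanation described above. If a field such as gender or zip code appeared in the list, that would be a visible signal to question the decision.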

The third pillar I suggest is that users should have some level of control over the way algorithms work and make decisions for them. Just having some basic feedback loop from the user to the algorithm is helpful.

Today, Facebook has a simple feature in its NewsFeed algorithm where users can flag a post and say, “this is offensive content” or “this is false news.” And if enough people complain, that triggers a human review or a more careful review. I advocate such a feedback loop.
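A feedback loop of this kind can be sketched in a few lines. This is not Facebook's implementation, which is not described here; the class name, the threshold of three flags and the rule of counting each user only once are all assumptions made for the example.

```python
from collections import defaultdict

REVIEW_THRESHOLD = 3  # hypothetical number of distinct users needed to trigger review

class FlagTracker:
    """Collects user flags and queues a post for human review once enough
    distinct users have complained about it."""

    def __init__(self, threshold=REVIEW_THRESHOLD):
        self.threshold = threshold
        self.flaggers = defaultdict(set)  # post_id -> set of user_ids who flagged it
        self.review_queue = []            # post_ids awaiting human review

    def flag(self, post_id, user_id):
        """Record a flag; return True if this flag sends the post to review."""
        self.flaggers[post_id].add(user_id)  # repeat flags by one user count once
        enough = len(self.flaggers[post_id]) >= self.threshold
        if enough and post_id not in self.review_queue:
            self.review_queue.append(post_id)
            return True
        return False

tracker = FlagTracker()
results = [
    tracker.flag("post-1", "alice"),
    tracker.flag("post-1", "alice"),  # duplicate flag, ignored
    tracker.flag("post-1", "bob"),
    tracker.flag("post-1", "carol"),  # third distinct user triggers review
    tracker.flag("post-1", "dave"),   # already queued, nothing new happens
]
print(results, tracker.review_queue)
```

Counting distinct users rather than raw flags is one possible design choice: it keeps a single user from forcing a review by flagging repeatedly.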

The last pillar is that when an algorithm is used for socially consequential decisions like recruiting, loan approval and so on, then there should be some formal audit process.

Most tech companies have no audit process at all. The engineer who develops the algorithm often tests it and then rolls it out. And that’s not enough in those kinds of settings. There has to be an audit process with either an independent team within the company or a third-party auditor.
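One check an auditor might run before deployment is a comparison of acceptance rates across groups. The sketch below is only one possible check among many, and its details are assumptions: the decision log is invented, and the 0.8 minimum ratio is borrowed from the "four-fifths" rule of thumb used in U.S. employment-selection guidance rather than anything proposed in the interview.

```python
def selection_rates(decisions):
    """decisions: iterable of (group, accepted) pairs -> {group: acceptance rate}."""
    totals, accepted = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        accepted[group] = accepted.get(group, 0) + (1 if ok else 0)
    return {group: accepted[group] / totals[group] for group in totals}

def audit(decisions, min_ratio=0.8):
    """Compare the lowest and highest group acceptance rates.
    min_ratio is an assumed threshold, not a universal standard."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return {"rates": rates, "ratio": ratio, "passed": ratio >= min_ratio}

# Hypothetical log of an algorithm's decisions on a test set.
log = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
report = audit(log)
print(report)
```

Here group A is accepted 60% of the time and group B 30% of the time, so the ratio is 0.5 and the audit fails. A failed check like this would be a reason to hold the rollout and investigate, not proof of the cause.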