Stepping Up to the Challenges of the Fourth Industrial Revolution

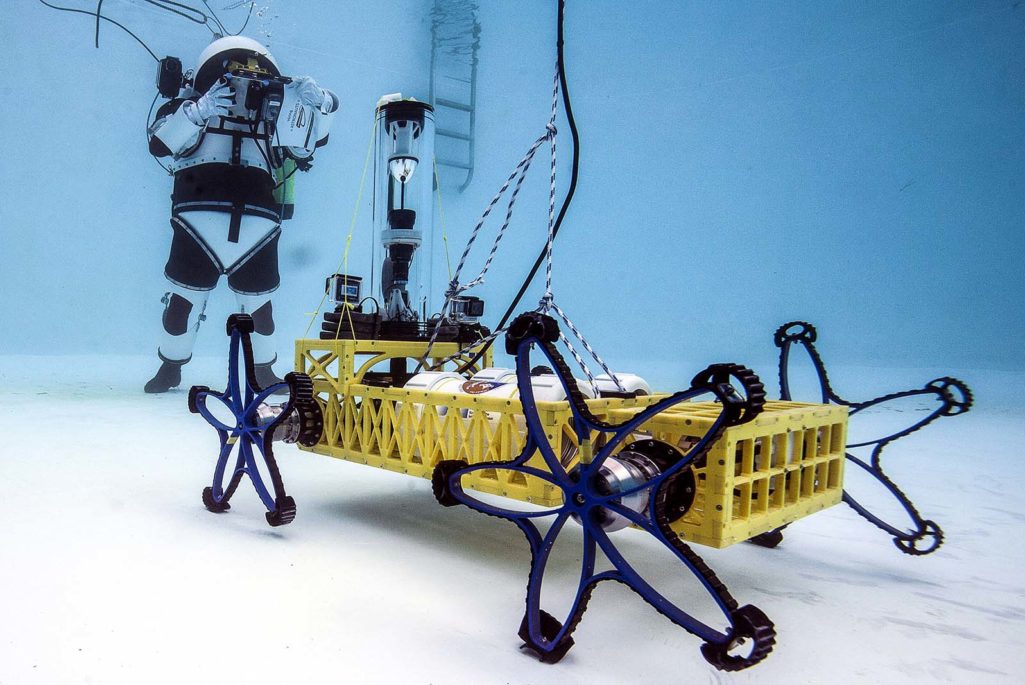

A Comex diver wearing a Gandolfi space suit undertakes training procedures in a swimming pool. The diver uses an underwater computer to drive 'Yemo,' a robotic vehicle developed by the German Research Center for Artificial Intelligence, to test all transmissions. The training session is in preparation for operations on the Moon or Mars.

Photo: Boris Horvat/AFP/Getty Images

Managing the risks and challenges presented by the emergence of the Fourth Industrial Revolution (4IR) was the central theme of the World Economic Forum's (WEF) 2016 annual meeting, held a year ago in Davos, Switzerland. The theme persisted during this year’s forum, and mitigating the risks of the 4IR is a top challenge in the recently published Global Risks Report 2017.

Over the past year, Klaus Schwab, WEF founder and executive chair, has described his vision for the Fourth Industrial Revolution in a number of articles as well as in a book. He places the 4IR within the historical context of three previous industrial revolutions: the first driven by steam and water power; the second by steel, oil, electricity and mass production; and the third by the dawn of the digital age.

The 4IR builds on our ongoing digital revolution. It “entails nothing less than a transformation of humankind,” Schwab wrote in his book’s introduction. “We are at the beginning of a revolution that is fundamentally changing the way we live, work, and relate to one another.”

Schwab continues: “[T]hink about the staggering confluence of emerging technology breakthroughs, covering wide-ranging fields such as artificial intelligence (AI), robotics, the internet of things (IoT), autonomous vehicles, 3D printing, nanotechnology, biotechnology, materials science, energy storage and quantum computing, to name a few. Many of these innovations are in their infancy, but they are already reaching an inflection point in their development as they build on and amplify each other in a fusion of technologies across the physical, digital and biological worlds.”

Along with their many benefits, technological revolutions have also been highly disruptive. The technologies of the 4IR will help us address some of our most pressing 21st-century problems, including health, education, energy, economic inclusion and the environment. But we must also identify the new global risks created by the 4IR, which, if not properly addressed, could threaten our well-being.

We must exploit the opportunities while mitigating the risks. “The extent to which the benefits are maximized and the risks mitigated will depend on the quality of governance — the rules, norms, standards, incentives, institutions, and other mechanisms that shape the development and deployment of each particular technology,” the Global Risks Report 2017 said. It’s important to strike the right balance. Overly strict restrictions can delay potential benefits, while lax governance can lead to irresponsible use and a loss of public confidence.

To better understand the 4IR risk landscape, the WEF conducted a Global Risks Perception Survey (GRPS), asking respondents to assess both the benefits and the risks of 12 emerging technologies. Artificial intelligence and robotics stood out, receiving the highest risk scores as well as some of the highest benefit scores.

Unlike biotechnology, which also received high benefit and high risk scores, AI is lightly regulated, most likely because it reached a tipping point in applications and market acceptance only in the past few years. But given the risks involved, the AI research community and government policymakers have recently argued for strengthening the governance of AI.

One of the best discussions of the challenges posed by bleeding-edge AI systems is a 2015 article, “Benefits and Risks of Artificial Intelligence,” by Tom Dietterich and Eric Horvitz, current and former presidents, respectively, of the Association for the Advancement of Artificial Intelligence. They list three major risks that we must pay close attention to:

Complexity of AI software: “The study of the ‘verification’ of the behavior of software systems is challenging and critical, and much progress has been made. However, the growing complexity of AI systems and their enlistment in high-stakes roles, such as controlling automobiles, surgical robots, and weapons systems, means that we must redouble our efforts in software quality.”

Cyberattacks: “AI algorithms are no different from other software in terms of their vulnerability to cyberattack. But because AI algorithms are being asked to make high-stakes decisions, such as driving cars and controlling robots, the impact of successful cyberattacks on AI systems could be much more devastating than attacks in the past. … Before we put AI algorithms in control of high-stakes decisions, we must be much more confident that these systems can survive large scale cyberattacks.”

The Sorcerer’s Apprentice: We must ensure that our AI systems do what we want them to do. “In addition to relying on internal mechanisms to ensure proper behavior, AI systems need to have the capability—and responsibility—of working with people to obtain feedback and guidance. They must know when to stop and ‘ask for directions’—and always be open for feedback.”

To help us better understand and manage these critical challenges, Horvitz spearheaded the launch of AI100 at Stanford University two years ago, “a 100-year effort to study and anticipate how the effects of artificial intelligence will ripple through every aspect of how people work, live and play.” One of its key activities is to convene a study panel of experts every five years to assess the then-current state of the field and to explore both the technical advances and the societal challenges expected over the next 10 to 15 years.

A few months ago, the first such panel published Artificial Intelligence and Life in 2030, a report that examines the likely impact of AI on a typical North American city by the year 2030. “Contrary to the more fantastic predictions for AI in the popular press, the Study Panel found no cause for concern that AI is an imminent threat to humankind,” the report’s executive summary said. “No machines with self-sustaining long-term goals and intent have been developed, nor are they likely to be developed in the near future. Instead, increasingly useful applications of AI, with potentially profound positive impacts on our society and economy are likely to emerge between now and 2030.” The summary goes on to say that many of these early AI efforts “will spur disruptions in how human labor is augmented … creating new challenges for the economy and society more broadly.”

Finally, in the last weeks of 2016, the Obama administration released “Artificial Intelligence, Automation, and the Economy,” a report that analyzes how AI will transform job markets over time and proposes policy responses to address AI’s impact on the U.S. economy. Overall, the report recommends that policymakers support AI for its many likely benefits while addressing the disruptions that might affect the livelihoods of millions. Its key points include:

Technology and productivity growth. As has been the case with past technology innovations, AI should make the economy more efficient and lead to productivity growth and higher standards of living. Such an AI productivity boost is particularly important given that productivity growth has slowed significantly over the past decade in the U.S. and other advanced economies.

Uneven distribution of impact across sectors, job types, wage levels, skills and education. It’s very hard to predict which jobs will be most affected by AI-driven automation. Like IT, AI is a collection of technologies whose impact varies from task to task.

Technology is not destiny—institutions and policies are critical. Policy plays a large role in shaping the direction and effects of technological change. “Given appropriate attention and the right policy and institutional responses, advanced automation can be compatible with productivity, high levels of employment, and more broadly shared prosperity,” the Obama administration report said.

AI and robotics are but two of several emerging technologies that will profoundly shape the Fourth Industrial Revolution. But, as Schwab reminds us in his book, “Technology is not an exogenous force over which we have no control. We are not constrained by a binary choice between ‘accept and live with it’ and ‘reject and live without it’. Instead, take dramatic technological change as an invitation to reflect about who we are and how we see the world.”